A practical step-by-step guide to defining AI use-cases

AI Problem Framing Workshop

Why AI Impact Lags Behind AI Investment

AI has become the default topic in every organization. Everyone is trying to understand where it fits, how it will shape their business, and how fast they need to move. Adoption is increasing, investment is increasing, and yet the impact is not.

Most industry reports say the same thing. AI initiatives don’t fail because the models are not ready. They fail because organizations lack the fundamentals: usable data, clear AI use-cases, and the governance required to scale responsibly.

The Core Problem: Undefined AI Use-Cases

This article focuses on one of those fundamentals — the lack of well-defined use-cases. More specifically, the lack of clear problem definitions where AI, and especially LLMs, could deliver real value: reducing costs, improving processes, growing revenue, or elevating the customer experience.

Introducing AI Problem Framing

What follows is a battle-tested method — AI Problem Framing — that helps teams answer two questions every organization is wrestling with:

What should we build with AI? What are our AI use-cases?

This is not a deep dive into the entire method (you can find that in my partner’s article, Problem Framing 101). Instead, the goal here is to show how to put it into practice. Many teams understand the principles of defining a problem, but they struggle when they try to apply them inside the reality of their organization.

And this is where the most important point needs to be stated clearly:

This is a workshop. Not a meeting, not a 6-week process, and definitely not a brainstorming session. A workshop.

Why Problem Framing Works Only as a Workshop

You can do pieces of the method in other ways — and many teams already do — but packaging them as a workshop is essential. There are three reasons for this:

- AI is cross-functional by nature. It touches business, technology, product, legal, operations, and of course users. No single person has the full picture.

- AI requires alignment. AI initiatives fail when everyone holds a different opinion or priority. Alignment cannot be assumed or delegated.

- You need executive buy-in. Without decision-makers in the room, your AI project risks becoming another shiny experiment in the growing graveyard of AI pilots.

Trying to do this the “business as usual” way — a sequence of 1:1 conversations, scattered meetings, Slack and email threads, and slide decks — slows everything down. It creates friction, it dilutes ownership, and it kills momentum. That approach might have been tolerable before, but it does not match the speed at which AI is moving today.

A well-prepared, well-run workshop gives you what the old way cannot: everyone in the same room, looking at the same information, making decisions together. In hours, not weeks. And in the age of AI, that is the only pace that works.

Let that sink in.

Why spend months on something you can resolve in a single day?

Now that the workshop format is clear, let’s look at who it is for before we dive into how it works.

Who the Workshop Is For

We designed the AI Problem Framing Workshop for any team that is being asked to “do something with AI” without a clear mandate. It is especially useful in large organizations, where collaboration is fragmented, alignment is rare, and decision-making is slow.

It works equally well for organizations taking their first steps in AI and for those looking to scale a mature AI innovation practice. If you have 300 AI ideas and need to prioritize the ones that matter, it works. If you need to identify and define a single use-case from scratch, it works just as well.

At Design Sprint Academy we run these workshops with product, innovation, and design teams because they often drive AI adoption. For example, at the World Bank we trained the IT Group, at HSBC we worked with the global design team, and at Turner Construction, the largest builder in the U.S., we collaborated with their innovation team.

The workshop also serves individual consultants (AI coaches, AI strategists, and facilitators) who want to guide clients through AI in a more structured, strategic way. It gives them a format that leads to tangible outcomes without needing a 100-page report or a 6-week consulting project.

Now let’s look at how the workshop actually works: the sequence of steps we use with our corporate clients, and how these steps help you define high-value AI and LLM use-cases with confidence.


The Roles You Need in the Room

Before anything else, you need the right people in the room. This single decision will make or break the workshop. All roles matter, but two are non-negotiable: an AI technical expert and a Decider (senior executive, sponsor, or someone with clear authority).

Why?

It is unrealistic to expect a group of people who only know AI as users — even power users — to define solid use-cases. Without a technical expert, teams don’t understand the capabilities, risks, implementation constraints, or feasibility tradeoffs involved. As one of our partners, an AI implementation firm, put it:

“People cannot even imagine what AI can do.”

The Decider is equally critical. Their role is to ensure decisions align with business goals and to remove obstacles for implementation. Without them, the workshop produces ideas but no commitment.

The rest of the team is just as important: domain experts, frontline employees, operations, support teams — the people who experience broken workflows every day, who hear customer complaints, and who already know where the real problems are. Bringing this mix together — business leaders, domain experts, and technical experts — is what reveals the most valuable AI opportunities.

Read more about how to assemble the AI Problem Framing team in Dana's article: Why AI initiatives need AI Discovery Pods

Why You Need a Neutral AI Facilitator

There is one more role worth mentioning: the person conducting the workshop. An AI Facilitator is best suited for this, and I’ve written a separate article about what that role involves. For the purpose of this guide, the key point is simple: the workshop needs a neutral facilitator — someone who can guide the group, keep the flow, and make sure every voice is heard.

This requires structure. With the steps below, anyone who has ever run a meeting can facilitate the workshop effectively, as long as they stay neutral and follow the process.

Finally, the workshop recipe and agenda. Depending on your context, scope, and the pre-work already done, the workshop can take anywhere from half a day to a full day. Here’s the breakdown of how the AI Problem Framing workshop unfolds:

The AI Problem Framing Workshop Structure

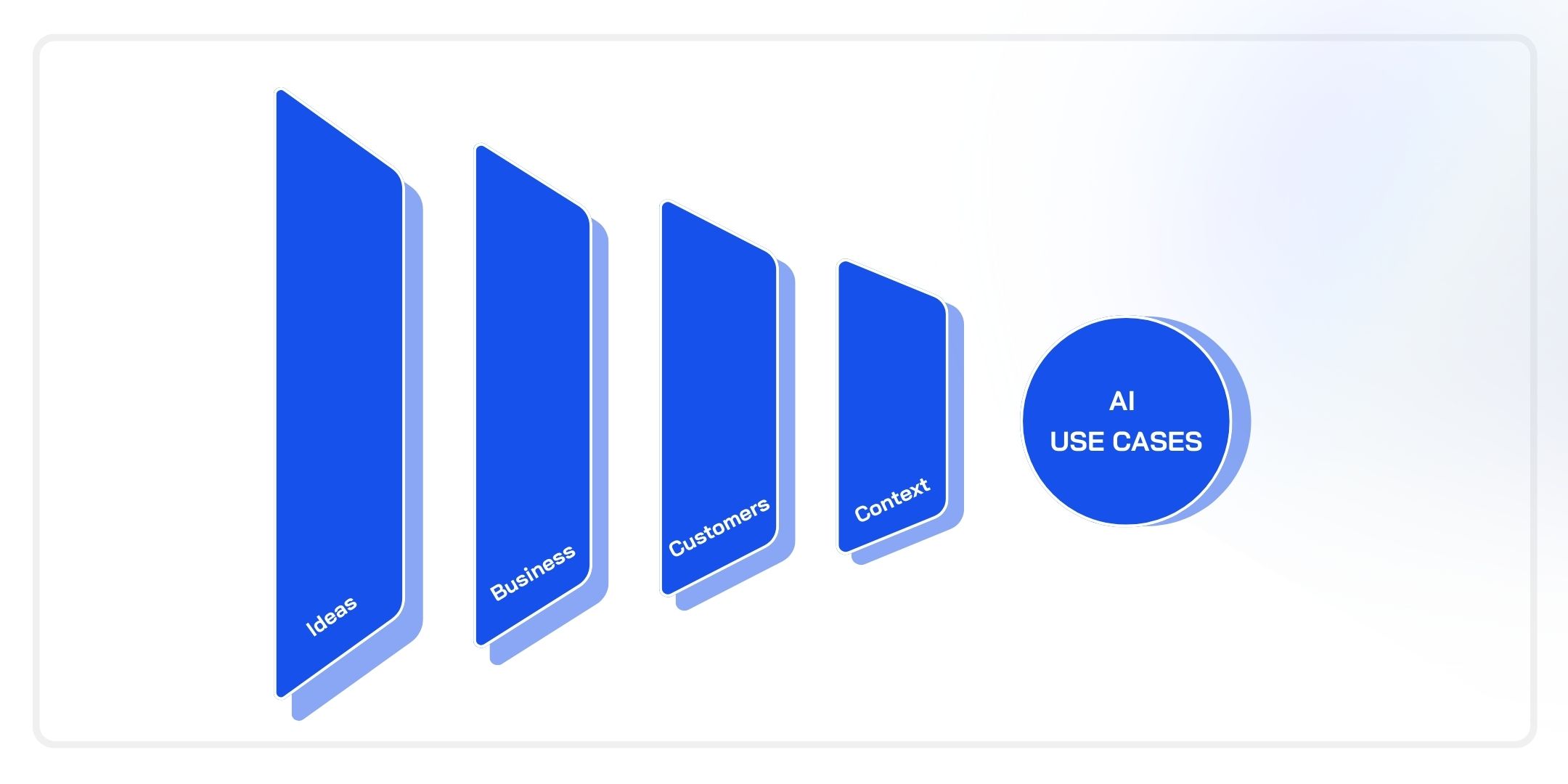

Step 1. Start With the Reality You Already Have: AI Ideas

Every organization already has AI ideas floating around — mandates from leadership, backlog items, things people have seen in the wild or even early AI concepts. Starting with solutions may feel counterintuitive in a problem-framing workshop, but with AI it’s unavoidable. People already have ideas, and it’s better to surface them than pretend they don’t exist.

At this stage, we let everything onto the table. We don’t judge feasibility, value, or alignment. We simply make the implicit explicit. It also energizes the team — this is often the first moment where they see the full picture of what everyone else is thinking.

Pre-workshop:

Collect as many existing AI ideas as possible from participants and stakeholders, and print them on cards. You can still gather ideas in the room, but doing this upfront saves time and ensures nothing gets lost.

Step 2. Business Goals

Next, we anchor the ideas in the business context. The team reviews existing goals, OKRs, and KPIs, and identifies what is currently blocking progress. Only then do we map the AI ideas to those blockers.

The point is simple. AI only matters if it moves a real business goal.

This step filters out noise early. Ideas that looked promising become irrelevant once the team realizes they don’t support any priority. Others suddenly gain importance.

By the end of this block, the team has a shared view of what the business cares about and how the current AI ideas map — or do not map — to those goals. Everyone feels heard, and a first round of prioritization has already happened. No frustration, no arguments — the alignment emerges naturally because the criteria are objective.
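For teams that track the workshop output digitally, the mapping in this step can be sketched in a few lines of Python. This is only an illustration: the `Blocker` and `Idea` structures, the `map_ideas_to_blockers` helper, and the sample ideas are all hypothetical and not part of the workshop materials. In the room, this happens with printed cards.

```python
from dataclasses import dataclass, field


@dataclass
class Blocker:
    """Something currently holding back a business goal (hypothetical structure)."""
    name: str
    goal: str


@dataclass
class Idea:
    """An AI idea collected in Step 1, tagged with the blockers it addresses."""
    title: str
    addresses: list = field(default_factory=list)  # names of blockers


def map_ideas_to_blockers(ideas, blockers):
    """Split ideas into those tied to a known blocker and those that are not."""
    known = {b.name: b for b in blockers}
    mapped, unmapped = {}, []
    for idea in ideas:
        hits = [known[name] for name in idea.addresses if name in known]
        if hits:
            # Record which business goals the idea would help move.
            mapped[idea.title] = sorted({b.goal for b in hits})
        else:
            unmapped.append(idea.title)
    return mapped, unmapped


# Sample data, invented for illustration only.
blockers = [
    Blocker("slow ticket triage", goal="Reduce support cost"),
    Blocker("manual quote preparation", goal="Grow revenue"),
]
ideas = [
    Idea("Auto-classify support tickets", ["slow ticket triage"]),
    Idea("Draft sales quotes with an LLM", ["manual quote preparation"]),
    Idea("Company-wide chatbot", []),
]

mapped, unmapped = map_ideas_to_blockers(ideas, blockers)
print("Supports a goal:", mapped)
print("No goal attached:", unmapped)
```

Ideas that land in the second group are not necessarily discarded, but they are the ones the team should question first.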

Pre-workshop:

Gather all relevant business goals and KPIs tied to the team, initiative, or broader AI context. Bring them into the room as printed cards so everyone is working from the same information.

Step 3. Customer

At this point, the team has a list of potential AI ideas mapped to business goals. Some of these ideas may already be grounded in customer problems, but most will be business-driven. That’s normal. Now that the business context is clear, the team needs to shift perspective and ask a different question:

Which customer problems are we actually trying to solve?

Here, “customer” can mean an external user buying your product or an internal user — a stakeholder or employee — depending on whether the focus is on customer experience or internal processes.

We don’t treat the customer as a broad, abstract group. We define the Minimum Viable Segment (MVS): the smallest group worth solving for first, but still meaningful enough to make an AI solution viable and profitable. The MVS gives the team focus and forces specificity.

From here, the team uncovers the concrete needs and problems of this segment that AI could potentially solve. This is where real insights surface. Problems that looked big turn out not to be top-of-mind, while problems that seemed small turn out to be deal-breakers.

This prevents the team from chasing “AI ideas” that don’t matter for customers.

Pre-workshop:

If your organization already has customer profiles, personas, or research, bring that material into the workshop. It will make the customer prioritization faster and reduce assumptions, grounding the discussion in existing insights and data.

Step 4. Context

Problems don’t exist in isolation. They appear inside workflows, systems, and real customer experiences, and that context is critical to both understanding and solving them. This stage captures it: the team maps the actual experience, either as a Customer Journey Map or a Service Blueprint, depending on whether the problem is external or internal.

The goal is not a perfect diagram. The goal is a shared understanding of:

- what the user actually does,

- where the process breaks,

- where decisions are made,

- where delays or inconsistencies appear,

- and what the desired outcomes are.

This step forms the foundation for defining AI use-cases. You cannot define a good use-case without understanding the underlying work. Throwing AI at a broken process only amplifies the dysfunction.

Once the map is clear, the team turns friction points and desired outcomes into “What if…?” questions — quick hypotheses about where AI could help.

Examples:

- What if we could predict this step before it becomes a problem?

- What if we automated part of this workflow?

- What if frontline staff had real-time insights here?

These ideas are placed directly onto the map. Patterns start to emerge — clusters around decision points, manual tasks, high-volume content work, or places where people compensate for broken processes.

The result is a set of structured, specific AI opportunities grounded in real workflows and real customer experiences. These can be added to the ideas surfaced earlier and prioritized against business needs. The difference is that this batch is rooted in actual insights, not assumptions.

Pre-workshop:

If your organization already has research, journey maps, or service blueprints, bring them into the workshop. This speeds up the process and ensures you’re working from an accurate current state. Ideally, a draft map should be prepared before the session to save time and give the team a head start.

Step 5. AI Use-Cases

The next step is prioritization. It covers both the AI ideas that survived the business filter in Step 2 and the opportunities that emerged from the context mapping in Step 4. The goal is to evaluate everything in a way that is simple, fast, and grounded in business reality.

Each opportunity is evaluated through four lenses:

- Growth: Will it unlock new value or scale impact?

- Pragmatic: Can we implement this realistically in the near term?

- Money: Does it reduce cost, increase margin, or drive revenue?

- Data: Do we have — or can we get — the data needed to make this work?

This is where alignment happens.

People stop debating opinions and start discussing evidence.

And this is why having the right people in the room matters — you need technical, business, operational, and customer perspectives to evaluate ideas objectively.
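If you want to record the four-lens evaluation in a spreadsheet or script, one possible encoding is sketched below. The article does not prescribe a numeric scale, so the 1 to 5 scores, the equal weighting, the `Opportunity` class, the `shortlist` helper, and the sample opportunities are all assumptions made for illustration. The scores themselves should come from the discussion in the room, not from the script.

```python
from dataclasses import dataclass

# The four lenses named above.
LENSES = ("growth", "pragmatic", "money", "data")


@dataclass
class Opportunity:
    title: str
    scores: dict  # lens name -> score from 1 to 5, agreed by the group

    def total(self) -> int:
        """Unweighted sum across the four lenses (an assumed scoring rule)."""
        missing = [lens for lens in LENSES if lens not in self.scores]
        if missing:
            raise ValueError(f"{self.title}: missing lens scores {missing}")
        return sum(self.scores[lens] for lens in LENSES)


def shortlist(opportunities, keep=3):
    """Rank opportunities by total score and keep the top few."""
    ranked = sorted(opportunities, key=lambda o: o.total(), reverse=True)
    return ranked[:keep]


# Sample data, invented for illustration only.
opportunities = [
    Opportunity("Auto-classify support tickets",
                {"growth": 3, "pragmatic": 5, "money": 4, "data": 5}),
    Opportunity("Draft sales quotes with an LLM",
                {"growth": 4, "pragmatic": 3, "money": 4, "data": 2}),
    Opportunity("Predict late deliveries",
                {"growth": 4, "pragmatic": 2, "money": 3, "data": 1}),
    Opportunity("Summarize inspection reports",
                {"growth": 3, "pragmatic": 4, "money": 3, "data": 4}),
]

for opp in shortlist(opportunities):
    print(opp.total(), opp.title)
```

A simple sum keeps the exercise fast. A team that cares more about one lens, for example data readiness, could weight it more heavily, but that is a choice to make explicitly with the Decider.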

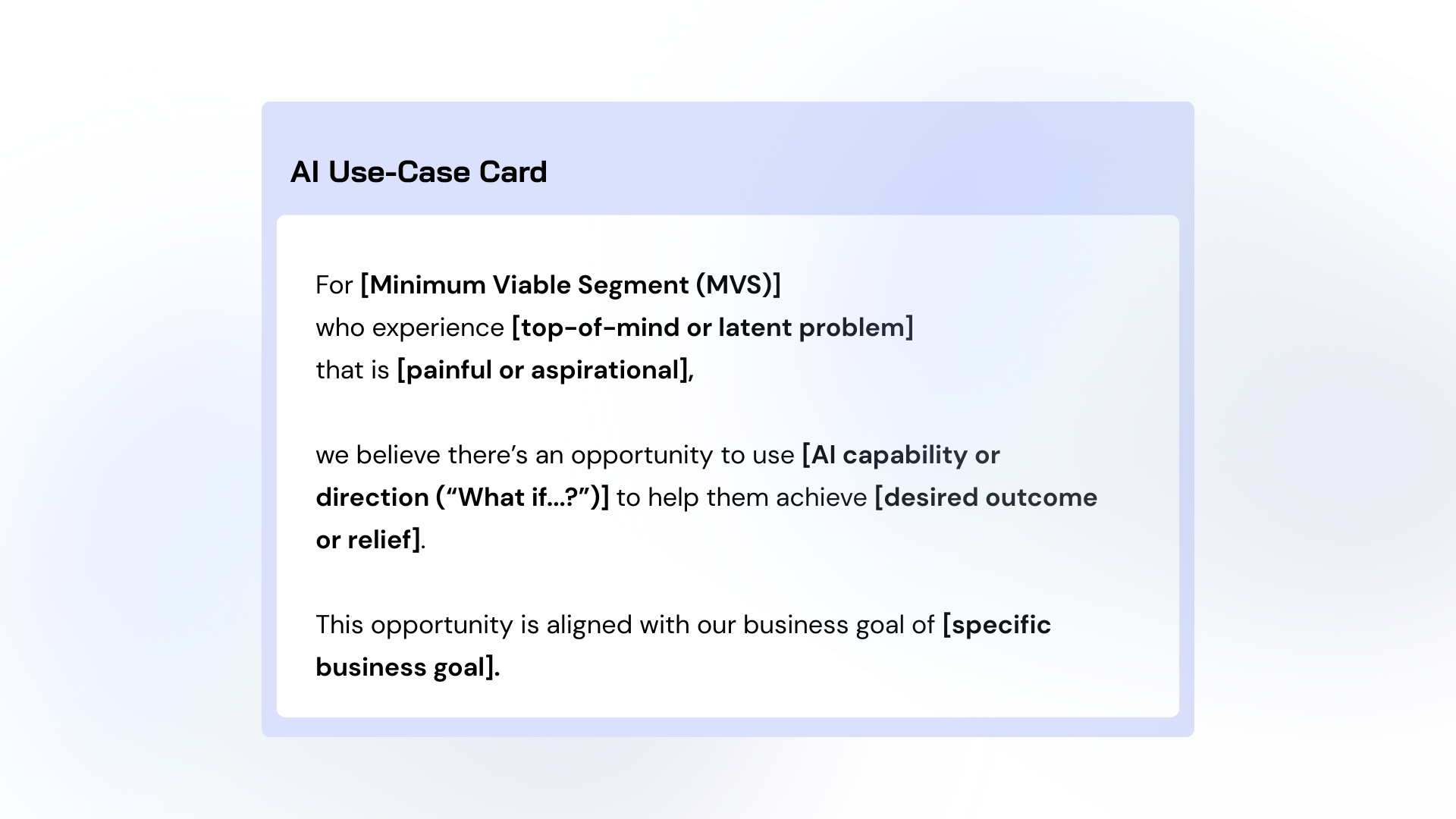

After reviewing the ideas through the four lenses, the team narrows the list to a handful of opportunities (typically three to five). These are then turned into clear, well-framed AI Use-Case Cards.

Each card includes:

- the target MVS,

- the problem and friction point,

- the desired outcome,

- the AI intervention,

- the value it creates,

- and the business goal it supports.
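For teams that store their cards in a tool rather than on paper, the six elements above translate naturally into a small record. The sketch below is one hypothetical way to represent a card in Python. The field names, the `missing_fields` check, and the sample content are assumptions, not an official template.

```python
from dataclasses import dataclass, fields


@dataclass
class UseCaseCard:
    """One AI Use-Case Card, with one field per element listed above."""
    target_mvs: str
    problem: str
    desired_outcome: str
    ai_intervention: str
    value: str
    business_goal: str

    def missing_fields(self):
        """Return the names of fields left blank, so gaps are visible."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Sample card, invented for illustration only; "value" is left blank on purpose.
card = UseCaseCard(
    target_mvs="Tier-1 support agents handling billing tickets",
    problem="Agents spend the first minutes of each ticket working out its category",
    desired_outcome="Tickets arrive pre-sorted and routed to the right queue",
    ai_intervention="LLM-based classification of incoming tickets",
    value="",
    business_goal="Reduce support cost",
)

print(card.missing_fields())
```

A card with blank fields is a signal that the use-case is still resting on assumptions and probably needs validation before anyone builds it.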

This is the moment where everything comes together.

A vague ambition like “Do something with AI” becomes:

A defined, feasible AI/LLM use-case with a clear user focus, a real problem, and measurable value.

Leadership can sign off.

Teams can start working.

Depending on the level of risk, they can begin building or move into validation.

And that’s an important distinction. Some use-cases will be grounded in solid data and clear insights; others will rest on assumptions. The former can move straight into implementation. The latter require validation — and the best next step is often the AI Design Sprint, a focused workshop for testing whether the solution actually works.

At the end of the day, AI Problem Framing doesn’t only define and prioritize use-cases. It also reveals blind spots — what the team knows versus what they think they know. That clarity alone is a win. It prevents teams from chasing ideas with little potential and encourages them to close knowledge gaps before investing months into unvalidated assumptions.

If you want to start running AI Problem Framing Workshops, you don’t need to reverse-engineer the method from this article. We’ve built an AI Facilitator Stack that gives you everything you need to run the workshop tomorrow. The facilitation slides guide the group through each step. The playbook teaches you the method in detail so you understand the thinking behind the exercises. And the agenda keeps the session on track. It’s the exact stack we use when we run AI Problem Framing Workshops with our clients across the world.


The Strategic Side of the AI Problem Framing Workshop

A single workshop can create powerful clarity, but the real impact comes when the effect is repeated and scaled. One workshop is helpful. Many workshops, embedded across teams, change how an organization thinks. One flower does not make spring — and one Problem Framing session does not make an AI strategy.

What excites me most is not the workshop itself, but what happens when teams across an organization use the same, simple, repeatable way of defining AI use-cases.

The method is a recipe — a sequence of steps anyone can learn. That makes it scalable. And when you have thousands of employees and hundreds of teams being asked to “do something with AI,” scalability is not optional. You need teams working consistently, using the same structure, the same language, and the same criteria to define problems worth solving.

This is where AI Problem Framing becomes strategic.

Most organizations resist change. They have established workflows, methods, and approval processes. Traditional transformation programs are slow, expensive, and rarely deliver the intended impact. But Problem Framing does not require a transformation initiative. It is plug-and-play. You can embed it inside the work teams already do.

Here are a few examples:

- IT departments and technology partners already have a scoping phase when engaging with internal stakeholders or clients. The Problem Framing workshop fits naturally here — this is how you define the problem before writing a single line of code.

- Strategy consulting agencies work on defining strategic direction, shaping priorities, and aligning leadership around where to focus next. Problem Framing strengthens these conversations by grounding strategic choices in real problems and evidence instead of opinions or political pressure.

- Product teams already build roadmaps and negotiate priorities with stakeholders. Problem Framing gives them a way to identify high-value opportunities and align stakeholders upfront — solving one of the hardest parts of product management.

- Innovation teams already work on opportunity identification. Problem Framing gives them a consistent, repeatable method instead of ad-hoc workshops or brainstorming.

The point is simple:

AI Problem Framing doesn’t compete with existing processes. It integrates with them.

And while I strongly advocate for dedicated AI Facilitators — because the role is becoming critical for any organization adopting AI — the workshop can also be run by Agile Coaches, Innovation Managers, Product Managers, Designers, and other roles that already guide teams through decision-making.

This is why scale and repetition matter more than a single successful session. Once embedded, Problem Framing becomes a habit — the organizational equivalent of going to the gym. Teams develop a shared way of thinking, a discipline for defining and prioritizing AI use-cases, and a consistent approach to reducing risk and focusing on what matters.

That’s when Problem Framing stops being a workshop and becomes a capability.
