Why AI initiatives need AI Discovery Pods

and how an AI Facilitator builds one that works

Here’s the scenario:

A leader announces an AI goal. The brief is vague. A few capable people are told to “figure it out.” They form a team and start building.

Then they hit the wall: nobody agreed on the real problem, the constraints, or how to judge success.

The outcome is familiar: competing priorities, slow approvals, and expensive demos nobody asked for.

An AI discovery pod prevents that, provided you treat it as a short-lived unit with one job:

- identify AI use cases worth the investment

- test and validate them with a quick prototype

It isn’t a build team or a shipping team. It’s a week to cut guesswork and make a decision.

This is also where an AI Facilitator matters: they put the right people together, run the week with discipline, and shut the pod down when the work is done.

What kind of Agile pod is this?

Not a permanent product team. Not a delivery squad. Not a culture experiment.

It’s a project-based discovery pod, assembled for five focused days:

- Day 1: AI problem framing

- Days 2–5: AI design sprint (build + test AI prototypes)

After day five:

- Results and evidence get handed off

- The pod breaks up

- Production teams take over

This borrows from the idea of small, cross-functional pods used for hard digital problems (source: Rewired: The McKinsey Guide to Outcompeting in the Age of Digital and AI). We adapted it for early AI work, where speed and shared understanding matter more than scale.

Why AI work needs this setup

AI forces more human collaboration (never thought I would say that, but it's true). Unlike classic product work, AI initiatives:

- Cut across data, tech, legal, and operations

- Introduce real regulatory and trust risks

- Surface unknowns at different points (data quality, feasibility, ethics)

Trying to handle that in sequence turns into a calendar of meetings that still don’t answer the key questions.

The alternative is simple: get the right people in the room at the same time, working on the same problem, with a clear finish line.

That’s the discovery pod.

The pod exists for discovery and proof of concept (PoC), nothing more

This constraint matters.

The pod’s job isn’t to ship. It’s to:

- Choose AI use cases worth solving

- Build and test prototypes with real users

- Prove (or disprove) desirability before real investment

Decide early with evidence—or don’t build.

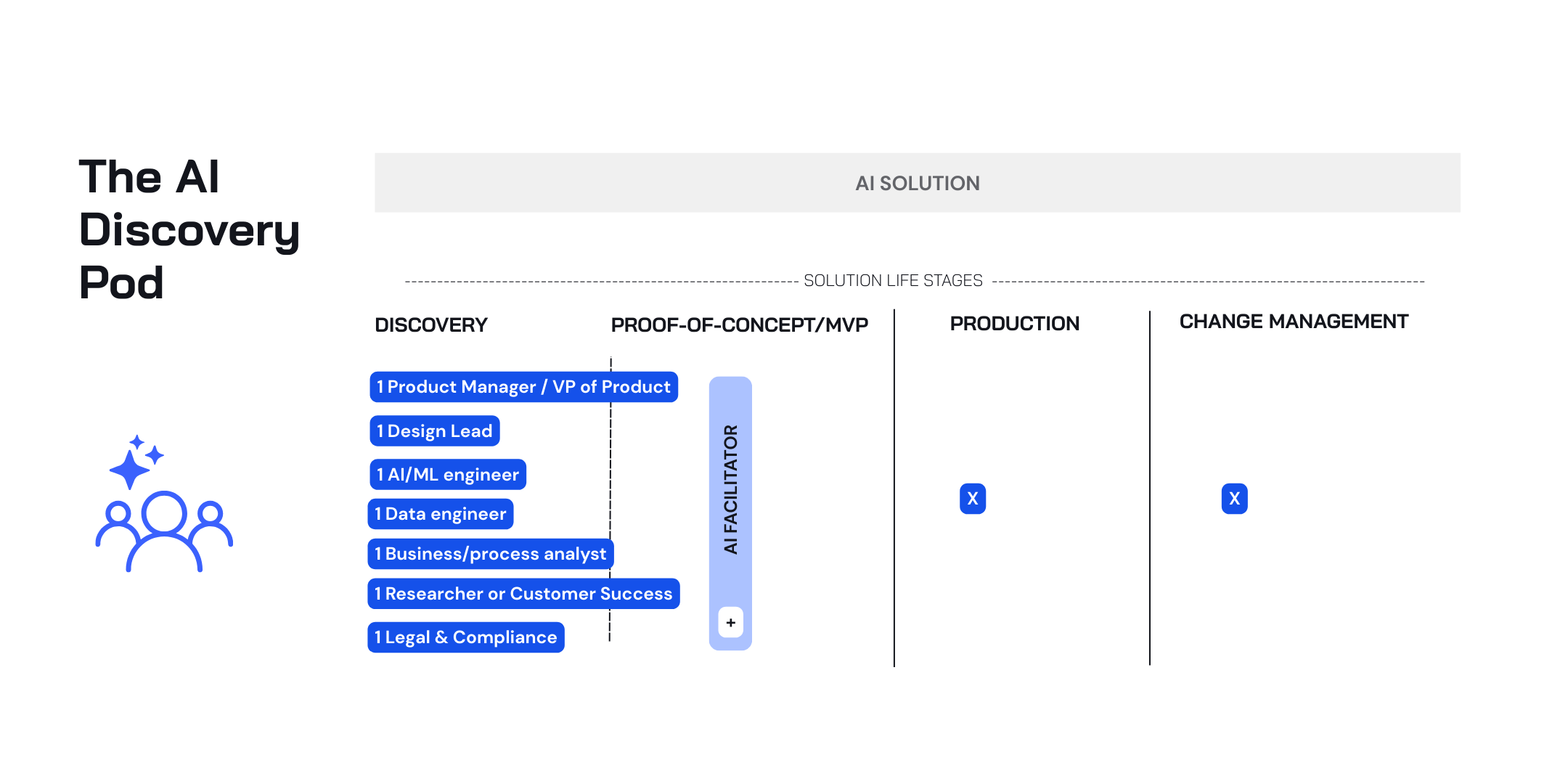

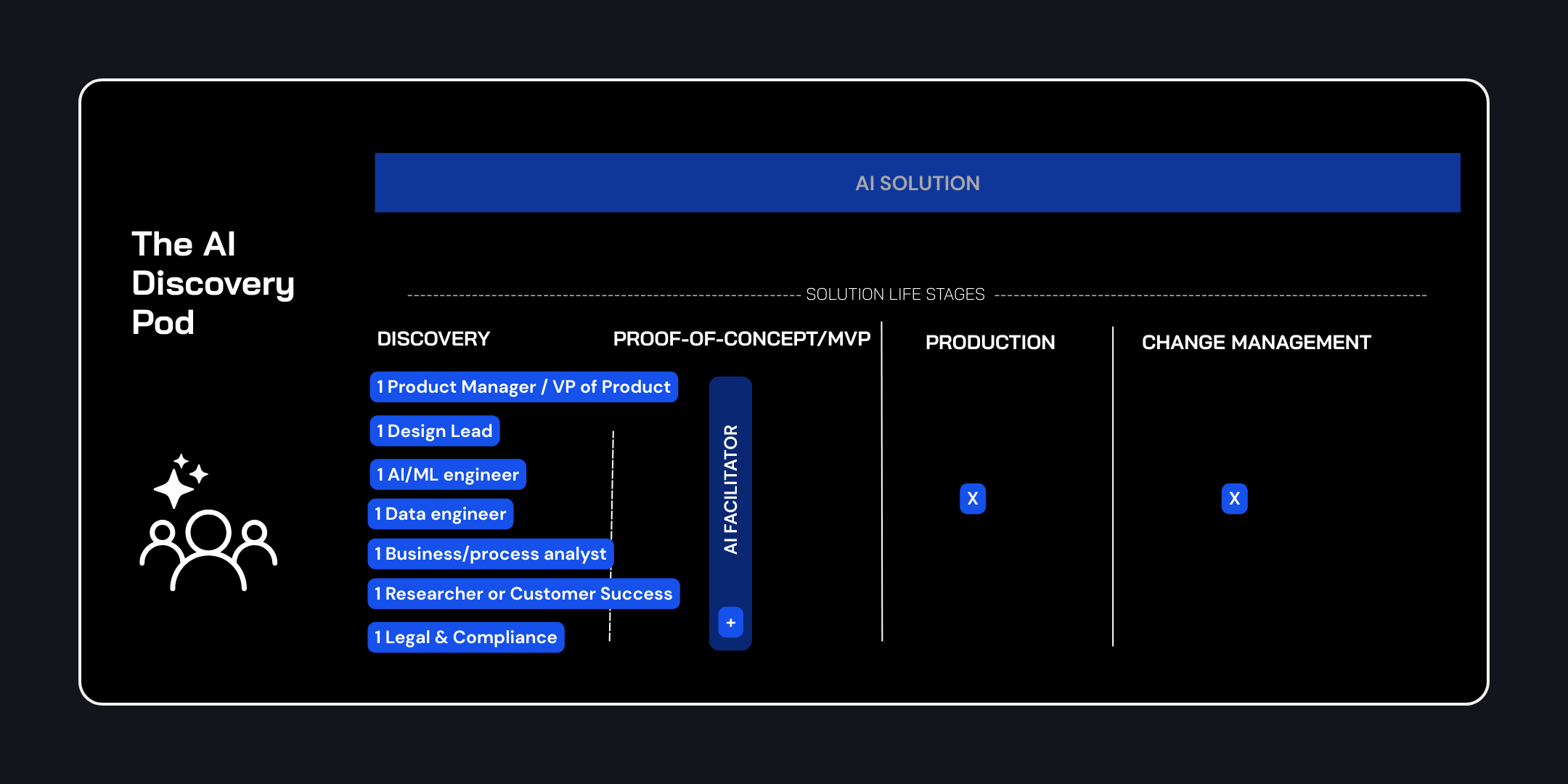

The ideal AI discovery pod

For this stage, a pod usually needs:

- Product manager/VP of Product — owns the decision (will act as a decision-maker)

- Design lead — runs user research, prototypes, and testing

- AI/ML engineer — checks feasibility, model options, limits

- Data engineer — checks data access, quality, pipelines

- Business/process analyst — ties ideas to real workflows and value

- Researcher or customer success — brings user reality into the room

- Legal & compliance — flags constraints and red lines early

- AI Facilitator — runs the week and protects focus

A few rules that matter more than titles:

✅ everyone is fully, 100% committed on workshop days

✅ in‑person beats hybrid at this stage. The team gets together in a physical space.

✅ the group is a mix of backgrounds and thinking styles

This mix works because it creates tension you can use: feasibility meets imagination; risk meets ambition.

What the AI Facilitator owns

The facilitator is there to run the work.

They:

- design the decision flow for the week

- prep the context so the team starts on the same page

- guide the team through the AI Problem Framing and AI Design Sprint stages

- bring in expertise at the right moment instead of letting it flood the room all day

- stop two common failure modes: status battles and AI hype

Most important, they make sure the pod ends with clean outputs and a clean handoff.

Without a facilitator, these pods turn into debates. With one, they turn into a decision engine.

How an AI Facilitator should assemble the pod

1. Start with the type of AI solution

The solution you’re exploring determines the expertise you need.

A generative AI assistant? A decision‑support system? An automation engine?

Each requires different depth in:

- ML vs data engineering

- UX vs workflow design

- Risk and compliance involvement

Generic roles lead to generic outcomes. Pick the roles based on what you’re exploring.

2. Be explicit about the life stage

This pod exists for only two things: discovery and PoC.

So the goal for the team must be specific:

"Identify AI use cases worth solving and validate them with real users."

If the pod isn’t aligned on this, it will drift into solution-first thinking or architecture arguments.

3. Plan the handover

The pod is temporary. It is assembled for one project and disbanded once the team has figured out the solution direction.

The AI Facilitator must plan:

- What gets documented

- What assumptions are tested

- What decisions are locked

- What questions remain open

That way, production teams don’t spend weeks reverse‑engineering intent.

4. Use this pod only for the right problems

This is the wrong tool for small backlog items and low‑risk tweaks.

Use a discovery pod for problems that are:

✅ complex and still undefined

✅ high risk (trust, compliance, real operational harm)

✅ strategically important, with real uncertainty

If everything gets a pod, the pod becomes another meeting.

Three bad assumptions you must unlearn

At this point, most organizations think the hard part is logistics: roles, availability, seniority. That stuff matters, but it’s not what sinks the week.

AI discovery pods go sideways because they’re built on false assumptions about leadership, collaboration, and talent—assumptions nobody questions when the pressure is to “do something with AI.”

On paper, the team looks strong. Then ambiguity shows up, and the group stalls.

Here are the myths that come up most when leaders and facilitators assemble AI pods.

Assumption #1: “We just need a strong AI leader”

There is no AI unicorn. One person won’t have the domain context, the data reality, the legal constraints, and the product judgment.

What usually happens instead:

- leadership drops a vague mandate

- the AI lead becomes a bottleneck

- decisions stall

A pod spreads the thinking without losing accountability. The product manager decides. The pod supplies the evidence.

What you do need from leadership is confidence, permission to test, and air cover when the first idea fails. Research shows that teams that aren’t exceptional, and even mediocre ones, can still deliver strong work when they believe “we can do this!” That belief needs to be reinforced by the leadership team.

Assumption #2: “It takes a village”

No. It takes the right people, at the right time, working deeply together.

You need two kinds of voices in the same room:

- people close to the problem who know the constraints

- people with adjacent angles who can question those constraints

If the room is only problem veterans, you get too much certainty too early: “We’ve tried this.” “That won’t work here.” “It’s impossible because of X.”

Those reactions can be right—but early on they often shut down the search (or the creative confidence) before any concrete idea forms.

That’s why the pod also needs people with adjacent or different perspectives—inventors, creatives, or thinkers who aren’t trapped by past attempts and will propose options that sound unrealistic or even "crazy" at first.

Early-stage AI work needs that push and pull. Not blind optimism. Not instant shutdown.

Assumption #3: “Only an A‑team will deliver”

High individual talent or IQ doesn’t guarantee a perfect result. Early AI work is uncertain, and uncertainty punishes teams that can’t think together.

Research on collective intelligence (the “c‑factor”) suggests group dynamics can explain up to 40% of the variance in outcomes, separate from the average or max IQ in the room.

In practice:

- an average team can beat an elite one if it listens well and integrates perspectives

- a room full of high‑status experts can fail quickly when people defend their turf

This is where the facilitator earns their keep. They set the conditions for the group to think:

- balance voices so junior and non‑technical people speak

- use turn‑taking so airtime doesn’t default to the loudest person

- surface uncertainty early with short check‑ins and fast debriefs

Think of it like networking brains. The connection fails when people can’t read the room or don’t feel safe enough to say what they see.

Final thought

AI discovery is not a test of individual brilliance. It’s a test of whether a small group can learn and decide together under uncertainty.

A temporary discovery pod—built with intent and run well—turns AI ambition into a grounded direction. It also stops weak ideas early.

That alone is worth the cost.
