How to build an AI Lab — the only 3 things that matter

Just got appointed Chief AI Officer (CAIO), Head of AI, AI Innovation Director—or a similar role that blends strategy, technical direction, governance, and business fit? Congrats!

If part of your job is to set up and run an AI Lab, this is for you.

But first, let's discuss our context.

In 2024, the executive mandate was: “We need AI in our business.”

In 2026, the leadership question is: “How do we scale it?”

The external pressure is real

Clients are asking and competitors are shipping.

In many industries, customers now expect AI to show up in the solutions they buy: tailored experiences, faster cycles, better forecasting, automated service work, and visible cost savings. If you can’t show real execution, you don’t just lose “innovation points.” You lose mandates, market share, and relevance.

But the org’s reality hasn’t caught up

Inside the organization, the friction is familiar:

1) Bureaucracy meets efficiency pressure

Legal says: “Not until we have a policy.”

Security says: “Not until we control model access.”

Data says: “Not until we fix readiness.”

Product teams say: “We have 40 use case ideas and no way to choose.”

And that’s by design. Traditional orgs are built for reliable delivery (exploitation), not rapid experimentation. And you still need that delivery engine to keep running while you explore.

But responsible, meaningful experimentation needs different conditions. It has to happen:

- without disrupting core work

- without exposing sensitive data

- without governance showing up only at the end

That’s why the demand for a structured, safe space is growing.

That safe space is the AI Lab.

AI is moving faster than most organizational structures can absorb. So we’re seeing a rise in dedicated AI Practices, AI Labs, AI Studios, and Centers of Excellence—from banks to manufacturers to global agencies.

A real example: Anthropic Labs

That’s the point of an AI Lab: it isn’t a side project but an organizational adaptation to the speed of AI.

2) The gap that slows everything down

And then there’s the gap that slows everything down. It isn’t a shortage of AI engineers, data scientists, or ML engineers. It’s a shortage of connective tissue: people who can translate business outcomes → AI feasibility → workflow adoption → responsible deployment.

Because in 2026, the people who used to play that role are drifting away from it.

Product managers, historically the bridge between strategy and execution, are under pressure to become “AI PMs.” In practice, that often means spending their time building AI agents, prototypes, and model features. It’s the hype right now. Everyone is doing it. It feels concrete. It feels safe.

Designers are feeling the same shift. Many are learning to build end-to-end AI products to stay indispensable as design work gets redefined.

This upskilling isn’t wrong. But it creates a vacuum.

Everyone is busy learning how to build AI solutions. Almost no one is leading the cross-functional work of deciding what should be built, why it matters, how success will be judged, and what risks must be managed.

That’s when AI efforts swing to one of two extremes:

- AI theater: demos, pilots, excitement—no adoption

- AI lockdown: governance panic, restrictions—no progress

This is why AI Facilitators are emerging as a critical role: they close that gap. They create alignment, speed, and accountability, so AI becomes a real operational capability.


What a good AI Lab is

The goal isn’t to run experiments for their own sake. The goal is a repeatable way to do three things at once:

- move fast without going off the rails

- prove value without overbuilding

- scale adoption without losing governance

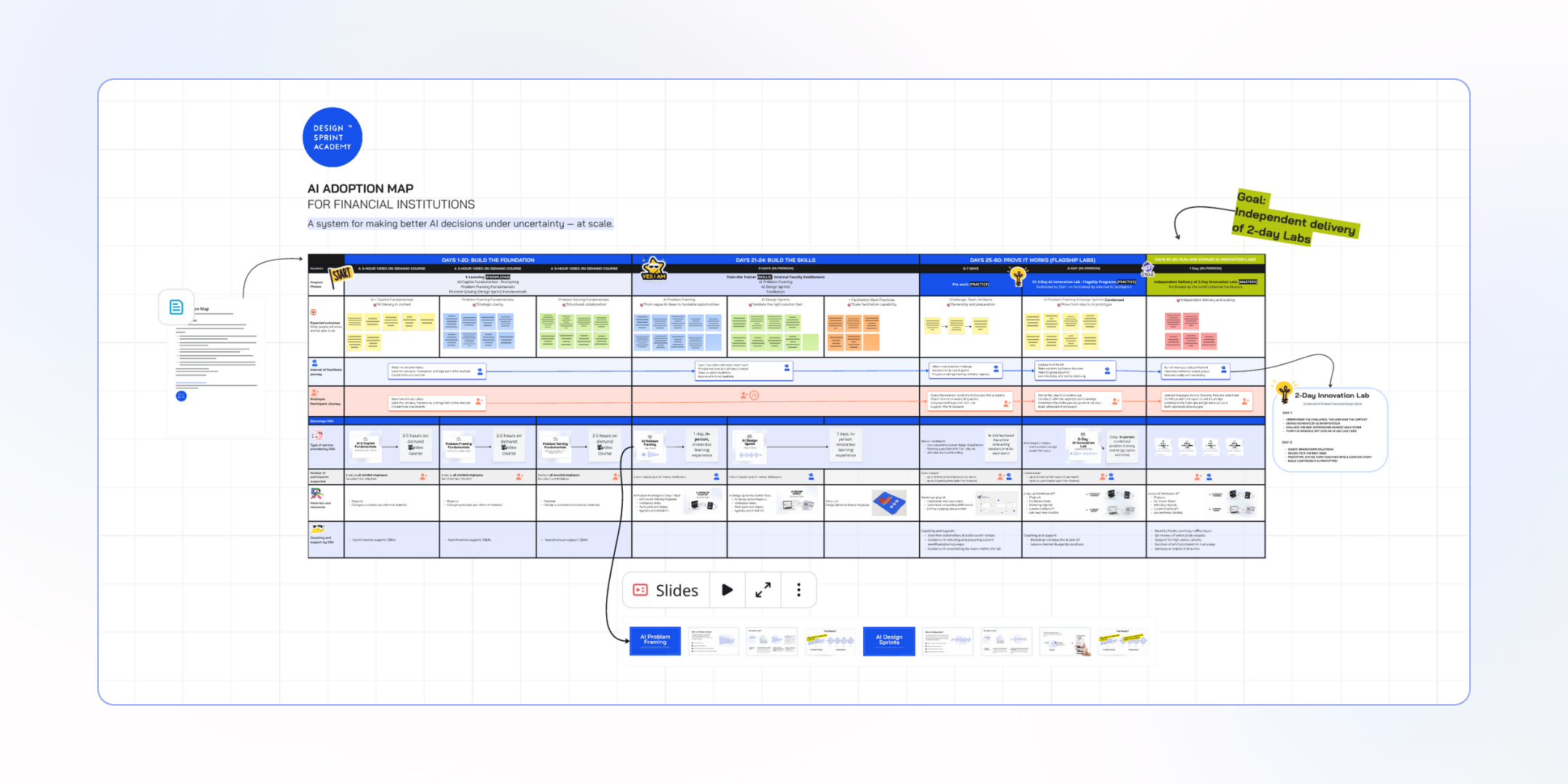

At Design Sprint Academy, we treat the AI Lab as operating infrastructure built on three building blocks:

✔️ AI Discovery Pods to create focus

✔️ AI Facilitators to run the thinking and decision work

✔️ 2-Day AI Labs to create a repeatable cadence

Together, they turn scattered pilots into a governed pipeline of outcomes.

1 — AI Discovery Pods

Most organizations try to run AI like normal delivery: Business writes requirements → Product scopes → Engineering builds → Risk reviews → Launch.

That order breaks with AI because the unknowns show up early.

- Data limits appear before the use case is even clear.

- Legal questions surface the moment real content gets involved.

- Trust problems show up before anything ships.

- Adoption can fail even when the model “works.”

AI doesn’t move in a straight line. It moves through collisions between workflow, data, risk, and human judgment.

That’s why the Lab needs pods.

A Discovery Pod is a small, cross-functional team assembled around one AI opportunity to answer the hard questions early—together.

Pod vs. CoE

A Center of Excellence is usually permanent and centralized.

A pod is temporary and specific. You spin it up around a problem, get to clarity fast, and disband once the decision is made.

Pods keep the Lab from turning into either:

- a backlog of ideas

- a slow approval funnel

Typical pod composition

A pod usually includes:

- Business owner accountable for outcomes

- Product lead focused on workflow fit and adoption

- AI/data lead assessing feasibility and evaluation

- Risk/compliance partner involved from the start

- Security or data governance rep setting safe boundaries

- Ops/change voice grounded in how work actually happens

This is the missing “connective tissue” in many AI efforts.

The pod’s job: clarity before build

The pod’s job isn’t to build an agent. It’s to answer four questions:

- What workflow is breaking today?

- What measurable outcome would change if we fix it?

- What data do we need, and is it usable?

- What risks are non-negotiable?

If you can’t answer these, you don’t have a use case. You have a hope.

Pods turn hope into a decision you can defend.

2 — AI Facilitators (the clarity builders)

Pods don’t run themselves. Even with the right people in the room, you don’t automatically get clear thinking. Cross-functional work needs design. It needs someone responsible for how the group thinks, not just what the group produces.

That’s the AI Facilitator.

An AI Facilitator helps teams make confident decisions about AI—fast, responsibly, and with evidence.

They don’t “own the answer.” They own the conditions that make good decisions possible:

- the right people in the room

- constraints surfaced early

- the right questions asked before anyone builds

- the discipline to drop weak ideas quickly

Who does this in real companies?

The title varies. In practice, AI Facilitators are often:

- innovation leads

- agile coaches

- design sprint or workshop facilitators

- transformation consultants

- product or service design operators

What matters is the skill set: clear communication across disciplines, comfort with ambiguity, credibility with senior stakeholders, and a bias toward evidence.

What they do inside the Lab

They replace weeks of scattered meetings with structured workshops. They:

- align leadership on what AI should do for the business

- assemble and onboard AI Discovery Pods at the right time

- frame use cases around real workflows and customer problems

- run short learning cycles (the 2-day workshops inside the AI Lab)

- make sure every Lab ends with a decision: build, pause, or kill

AI Labs don’t need more activity. They need more clarity.

3 — The 2-Day AI Lab (Workshops)

Collective intelligence doesn’t show up because you booked a room. It shows up when the conversation is designed.

A 2-Day AI Lab is a repeatable workshop format, led by AI Facilitators, that takes a pod from a vague leadership mandate to a customer-tested solution.

Each day has a different goal, a different pace, and a different way of thinking. Treating them as the same workshop is how teams end up with either endless discussion or a rushed prototype nobody trusts.

- Day 1: decide what to pursue (and why)

- Day 2: test how it could work (and whether it should)

At Design Sprint Academy, we run this as a condensed version of two methods: AI Problem Framing (Day 1) and an AI Design Sprint (Day 2).

Because pods are time-boxed and people have day jobs, we redesigned the full methods into a tighter sequence that still produces decision-grade outputs.

Day 1 — Condensed AI Problem Framing

Day 1 turns a broad “AI opportunity” into something specific enough to judge.

The pod:

- maps the current workflow and names the bottleneck

- defines the user and the moment that matters

- surfaces data limits, system dependencies, and risk boundaries early

- agrees on what success would change, in measurable terms

The output is a defined use case the business can rank, fund, or drop.

Day 2 — Condensed AI Design Sprint

Day 2 turns the use case into evidence.

The pod:

- generates solution options and picks one direction

- builds a lightweight prototype (often with AI-assisted tools)

- tests it with real users or frontline stakeholders

- ends with a call: build, pause, or kill

The result isn’t a demo for applause. It’s a decision-grade artifact: what we tested, what we learned, what constraints matter, and what happens next.

When you can scale this

Once you have:

- Discovery Pods as the unit of progress

- AI Facilitators as the operators of the decision work

- 2-Day Labs as the cadence

…you can run AI exploration across the business without losing control.

Success metrics for an AI Lab

Strong AI Labs don’t count activity. They track speed to clarity and quality of decisions:

✔️ time from idea intake to decision

✔️ share of weak ideas killed early

✔️ number of lab-ready opportunities produced

✔️ governance agreement reached up front

✔️ workflow readiness for adoption
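To make the first two metrics concrete, here is a minimal Python sketch that computes them from a simple decision log. The `LabRecord` format, its field names, and the `lab_metrics` helper are hypothetical, and the example entries are made up for illustration. The kill rate counts every killed idea, so it is only a rough proxy for “weak ideas killed early.”

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Literal

# Every 2-Day Lab ends with one of these three calls.
Decision = Literal["build", "pause", "kill"]


@dataclass
class LabRecord:
    """Hypothetical log entry for one idea's path through the Lab."""
    idea: str
    intake: date        # when the idea entered the Lab
    decided: date       # when the Lab ended with a call
    decision: Decision


def lab_metrics(records: list[LabRecord]) -> dict[str, float]:
    """Hypothetical helper: speed-to-clarity and kill metrics from a log."""
    if not records:
        raise ValueError("need at least one record")
    days = [(r.decided - r.intake).days for r in records]
    kills = sum(r.decision == "kill" for r in records)
    builds = sum(r.decision == "build" for r in records)
    return {
        # Median days from idea intake to a build/pause/kill decision.
        "median_days_to_decision": float(median(days)),
        # Share of all ideas killed: a rough proxy for "weak ideas killed early".
        "kill_rate": kills / len(records),
        # Above 1.0 means the Lab kills more ideas than it ships.
        "kills_per_build": kills / builds if builds else float("inf"),
    }


# Made-up example log, for illustration only.
example = [
    LabRecord("invoice triage", date(2026, 1, 5), date(2026, 1, 19), "build"),
    LabRecord("contract summaries", date(2026, 1, 5), date(2026, 1, 12), "kill"),
    LabRecord("churn forecast", date(2026, 1, 8), date(2026, 1, 29), "pause"),
    LabRecord("HR chatbot", date(2026, 1, 10), date(2026, 1, 17), "kill"),
]

print(lab_metrics(example))
# {'median_days_to_decision': 10.5, 'kill_rate': 0.5, 'kills_per_build': 2.0}
```

Governance agreement and workflow readiness are judgment calls that don’t reduce to a date or a decision field, so this sketch leaves them out.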

A healthy AI Lab kills more ideas than it ships. That’s how you stay focused—and ship what people will actually use.
