How to build a scalable AI adoption system (in 90 days)

In 2026, large organizations are trying to speed up AI adoption while their own CEOs describe the world as “unstable by default.” Economic swings, geopolitical shocks, and rapid shifts in AI capability shape day-to-day decisions at the top.

At the same time, leadership teams are expected to deliver more: higher productivity, faster growth, and measurable efficiency gains from AI. The ambition is clear. The conditions are not.

This matters because AI exploration doesn’t happen in isolation. It happens inside organizations that are already operating at capacity.

Over the past few years, many companies flattened their structures to move faster. Fewer layers. Decisions pushed closer to teams. In theory, that should mean speed and autonomy.

In practice, complexity grew faster than leadership capacity.

As AI moves into products, services, and internal workflows, the work of interpretation, prioritization, and risk management hasn’t disappeared. It has shifted. Fewer managers are now expected to translate strategy into priorities, define what’s worth exploring, and make sense of fast-moving technology, often without new decision tools or more time.

So you get a familiar loop:

- Teams are told to “explore AI” while still delivering the existing roadmap.

- Expectations rise faster than clarity around ownership, success criteria, and boundaries.

- Senior leaders remain accountable for outcomes while being pulled into decisions they can’t fully absorb.

That pressure doesn’t stay at the top. It spreads.

When AI initiatives launch without redesigning how decisions are made, how work gets prioritized, or how experimentation fits into delivery, organizations rely on people to compensate.

Teams work around unclear mandates. Managers absorb tradeoffs informally. Culture becomes the first place overload, hesitation, and fatigue show up.

That’s why many AI efforts stall: the organization isn’t built to carry ongoing exploration on top of ongoing execution.

So, what can you do?

You need a way to keep learning across the org while still running at full speed—without turning everyone’s week into a string of workshops and side quests.

That’s where AI Innovation Labs come in.

From ad-hoc exploration to designed exploration

Right now, AI exploration is often improvised.

Different teams run different meetings, follow different assumptions, and reach very different outcomes, even when tackling similar problems. Some are tied to strategy; others drift. Some get dominated by senior voices, others by whoever talks the longest. Some bring the right mix of expertise into the room; others don’t. Some make deliberate decisions; others go with gut feel.

The result:

- inconsistent decision quality

- duplicated effort

- and no shared learning across the organization

That’s exploration by coincidence. And it won’t hold over time.

AI Innovation Labs replace improvisation with designed exploration (a repeatable way to explore). How? By fixing how the work runs.

Each lab uses the same structure, aims for the same type of decision, and is guided by a trained AI Facilitator. Who joins the lab changes based on the problem.

What stays consistent:

- a fixed 2-day format

- a clear outcome: a user-tested AI solution

- facilitation skills that stay inside the company

- and a disciplined way to surface opportunities and tradeoffs early

That’s how exploration becomes an organizational muscle instead of a string of one-offs.

What is an AI Innovation Lab?

An AI Innovation Lab is a 2-day, tightly facilitated exploration sprint, run by an internal AI Facilitator and supported by a temporary, cross-functional pod assembled around a leadership-vetted AI opportunity.

It combines AI Problem Framing with a condensed AI Design Sprint to move from a fuzzy AI opportunity to a concrete, testable answer, fast.

The output is not a deck or a demo. It’s a user-tested AI solution: grounded in a real problem, tied to a business goal, and clear enough to either move forward—or stop.

Over time, you get a shared way of thinking and deciding about AI—across teams, not stuck inside silos.

But how do you go from “a lab would be great” to a lab that actually runs?

Here’s an example proposal we use with financial institutions.

90 days to build an AI adoption system

An AI adoption map for financial institutions

At Design Sprint Academy (DSA), we don’t treat AI adoption as a single initiative. We treat it as a system: something that builds capability, produces outcomes, and still works when regulation, risk, and delivery reality show up.

For financial institutions, that system has to do three things at once:

- build internal facilitation capability

- produce user-tested AI solutions

- work inside governance, risk, and delivery constraints

Here’s how we roll it out in 90 days.

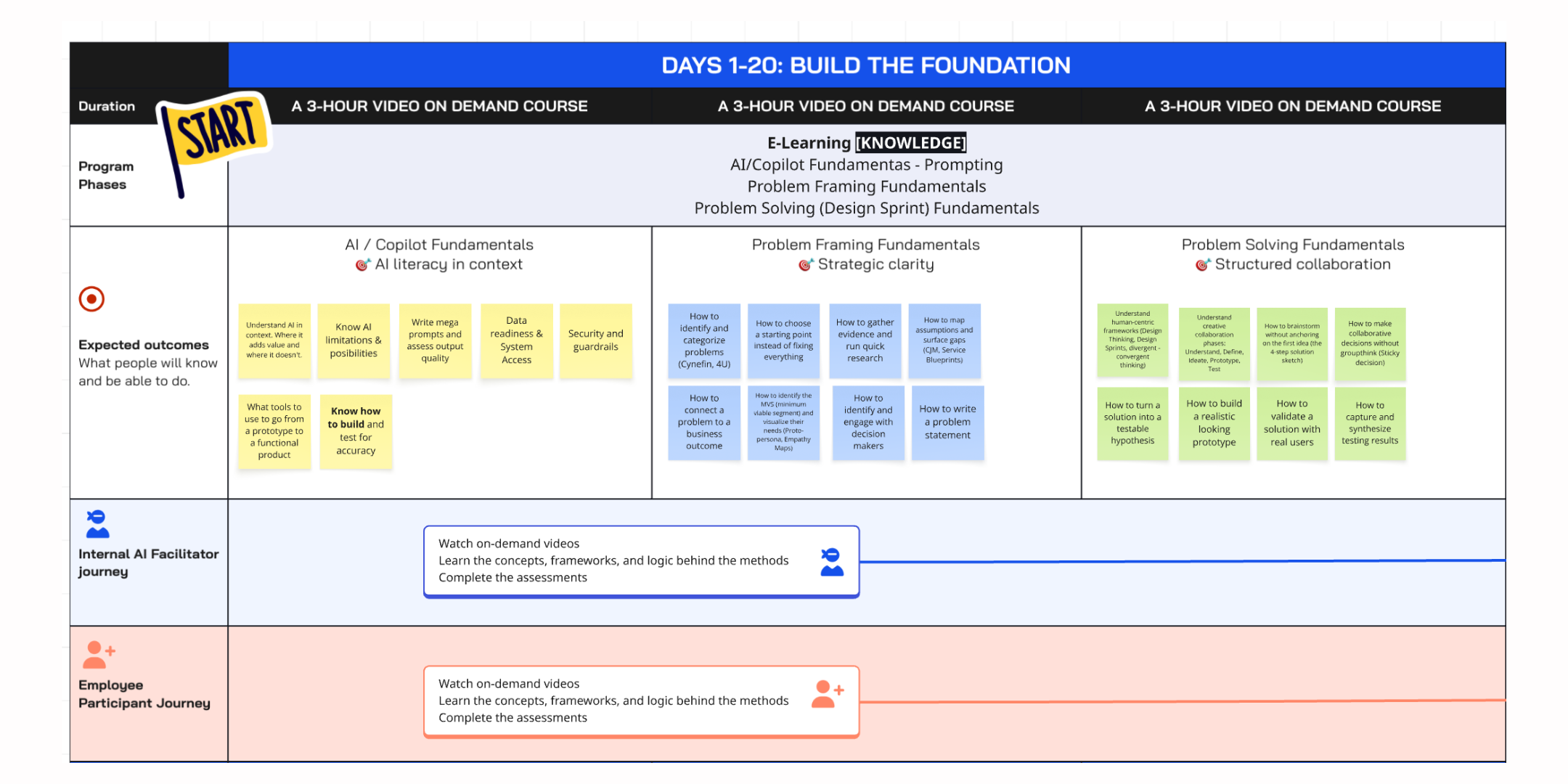

Phase 1 (Days 1–20): Build the foundation

Goal: shared language, shared judgment, and the conditions for repeatable exploration

The first 20 days are about understanding before execution.

In large orgs, AI work often stalls because a small group “gets it,” while everyone else receives scattered messages and mixed expectations. Phase 1 is designed to prevent that.

We start by creating a shared baseline across the organization.

AI champions and a broader set of employees go through the same foundation. Not to turn everyone into AI specialists—just to make sure people speak the same language when AI conversations begin.

What happens in Phase 1

Everyone completes a short learning path that fits around their day job:

- AI / copilot fundamentals - Where AI helps, where it doesn’t, and what limits matter in regulated settings.

- Problem framing fundamentals - How to move from a vague “we should use AI” idea to a business-relevant opportunity you can fund.

- Problem solving fundamentals (design sprint basics) - How to structure collaboration, compare solution approaches, and judge bets when there’s uncertainty.

This foundation is shared across roles so people leave with:

✅ a realistic picture of what AI can and can’t do

✅ a clearer link between AI work and business outcomes

✅ a common vocabulary for opportunities, risks, and tradeoffs

In parallel, we identify a smaller group of AI champions: often product leaders, innovation managers, designers, or people in transformation roles who are already trusted across the bank.

For them, Phase 1 is the start of the AI facilitator path. They don’t just learn the content. They’re assessed on whether they can use the methods and decision logic they’ll later run in labs.

Phase 2 (Days 21–24): Build the skills

Goal: turn AI champions into confident AI facilitators.

Phase 1 creates shared language and shared judgment. Phase 2 switches from knowing to doing.

Over three in-person days, AI champions go through a cohort-based train-the-trainer experience focused on problem framing and design sprint facilitation.

At DSA, our test is simple: Can these people run high-stakes AI exploration sessions on their own, in a regulated environment?

What happens in Phase 2

The training has two connected parts, using real business challenges as the material.

Day 1: AI Problem Framing Training (in person)

Champions learn to facilitate problem-first exploration:

- move from vague “we should use AI” ideas to fundable opportunities

- define Minimum Viable Segments (MVS)

- surface assumptions, risks, and constraints early

- write use cases that decision-makers can say yes or no to

Days 2–3: Condensed AI Design Sprint Training (in person)

Champions learn to guide teams from decision to validation:

- structure group decisions under time pressure

- run ideation, convergence, and prioritization without losing the room

- build and test lightweight AI prototypes

- turn user feedback into a clear next step

Alongside the methods, we train the mechanics that usually break workshops:

- managing senior stakeholders and group dynamics

- handling conflict, ambiguity, and disagreement without stalling

- making tradeoffs explicit between ambition, feasibility, and risk

- keeping teams on the problem, not the demo

Participants practice facilitation in a safe setting, observe expert facilitators, and get direct feedback.

Tools and assets provided

To make sure facilitators can run labs after training, we provide complete facilitation stacks:

- AI problem framing kit - Playbook, facilitation slides, worksheets, agendas, and checklists.

- Design sprint kit - Playbook, facilitation slides, worksheets, agendas, and checklists.

The point is consistent quality that doesn’t depend on a facilitator’s personality or prior experience.

Phase 3 (Days 25–60): Run flagship labs

Goal: produce user-tested AI solutions and prove the system holds in real conditions

Phase 3 is where the model meets the bank’s constraints: real stakeholders, real delivery pressure, real governance.

During this phase, we run three flagship AI Innovation Labs. They become reference cases for what “good exploration” looks like going forward.

Pre-work (5–7 days): set the labs up for success

Internal AI facilitators—supported by DSA coaching—own the prep work:

- select leadership-vetted business challenges

- recruit the right participants for each AI discovery pod

- prepare the challenge brief, artifacts, and logistics

- confirm data access, risk and compliance requirements, and other constraints early

This is not admin work. It’s the difference between a decision room and a brainstorming session. AI facilitators need to be intentional about:

- which challenge they bring into the lab

- how well that challenge aligns with the organization’s strategic objectives

- which senior stakeholder will take on the role of decision-maker

- what context the team needs to assess the current situation and past attempts to solve the problem

All of this needs to be prepared ahead of time.

Internal AI facilitators also select the participants for each AI discovery pod and onboard them before day one, so that:

- they understand the context

- they bring real workflows

- they arrive ready to work, not watch

The flagship labs: 3 × 2-day AI Innovation Labs

Each lab follows the same designed format:

- condensed AI problem framing

- condensed design sprint flow

- clear decision points

- user testing built in

DSA co-facilitates with the bank’s internal facilitators.

Internal AI facilitators will:

- lead parts of the lab

- make real-time facilitation calls

- adapt to group dynamics

- learn by doing, with support

Pods:

- bring domain expertise

- explore options inside real constraints

- build lightweight, testable AI prototypes

Each lab ends with a concrete outcome:

- a user-tested AI solution

- a clear recommendation on what happens next (build, revise, or stop)

Why this phase matters

This phase builds credibility. By the end of Phase 3:

- the organization has tangible outcomes, not just activity

- facilitators have proven they can run labs in practice

- leaders see what a good decision process looks like

- delivery teams get inputs they can act on

And just as important: the bank learns what not to pursue. Killing weak ideas early is a feature (not a bug).

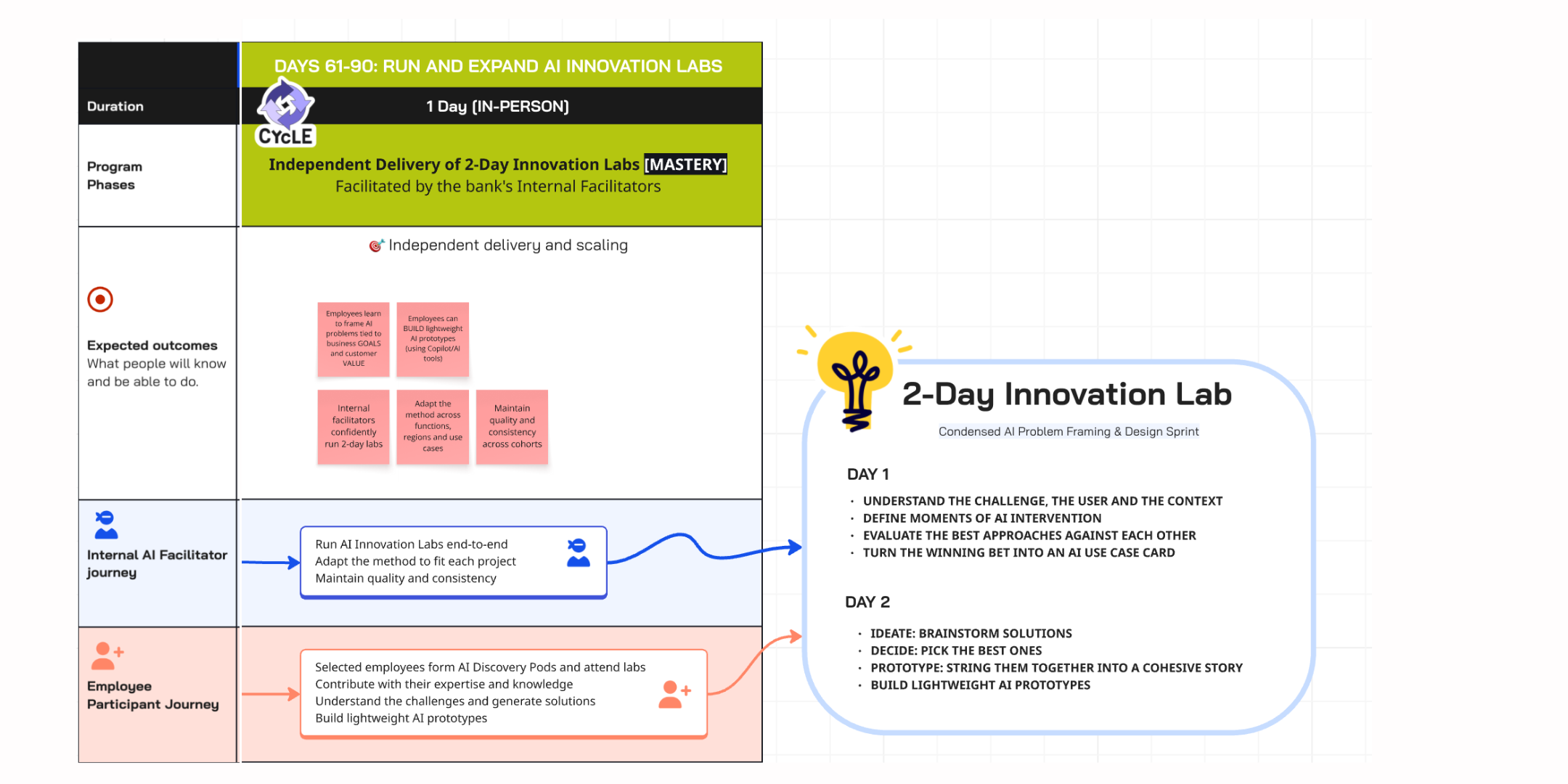

Phase 4 (Days 61–90): Run and expand AI Innovation Labs

Goal: enable internal delivery and grow exploration without losing consistency

Phase 4 is about ownership.

By now, the organization has:

- shared language and shared judgment

- trained internal AI facilitators

- proof from flagship labs

Now the system needs to run without DSA in the room—and still work.

What happens in Phase 4

Internal facilitators take full responsibility for labs end-to-end:

- select and frame new opportunities

- assemble and onboard pods

- run the full 2-day lab

- adapt the method to different business contexts

- keep the bar consistent across labs

DSA steps back from running sessions and shifts into a quality and enablement role.

Independent delivery, not “everyone does it their way”

Independence doesn’t mean improvisation.

Facilitators use the same:

- 2-day lab format

- kits and agendas

- decision artifacts and use case cards

- readiness checklists

As labs spread across functions and regions, decision quality stays steady.

Employees who join pods:

- frame problems in business terms

- bring domain detail that makes the solution real

- build lightweight prototypes inside approved tools and constraints

Exploration becomes familiar, not exceptional.

How DSA supports without taking over

DSA provides light, behind-the-scenes support:

- monthly facilitator coaching and office hours

- quality reviews of selected outputs

- support for high-stakes or sensitive cohorts

- updates to agendas and artifacts based on what teams learn

- advisory support as models, copilots, and governance rules change

The system stays sharp without creating dependence.

Why this phase matters

Phase 4 is where adoption becomes durable.

By day 90:

- AI Innovation Labs run inside the bank

- facilitators can handle new contexts without losing the method

- employees know what good exploration looks like

- leaders have a repeatable way to explore, test, and decide

AI is no longer explored through one-off pilots. It’s explored through a designed system the organization owns.

Want the AI Adoption Map?

If your org bought Copilot licenses, ran training, and now people know how to write a prompt—good. That’s the entry point.

If you’re past rollout, the questions change:

- Where are the real AI opportunities inside day-to-day work?

- How do we frame them so they’re fundable and within risk limits?

- How do we test what’s worth pursuing before it becomes a project?

Not “how do we use Copilot.”

How do we think, decide, and prioritize with AI.

That’s what the AI Adoption Map is for.

Request it below and we’ll email you the Miro board.
